The symposium “Analogue | Digital | Integrated: Competency-based assessment of the future” [Analog | Digital | Integriert: Kompetenzorientiertes Prüfen der Zukunft] took place on 4/5 May 2023 and can be summarised by the motto “To pastures new!” in two respects: 1) from an organisational standpoint, due to the combination of a one-day retreat on a ship from Regensburg to Passau with a “classical” conference with keynotes and panel discussion at the University of Passau, and 2) with regard to content, due to the intense discussion of sustainable concepts for concrete electronic examination scenarios.

Dazzling sunshine and reeling decks – you probably wouldn’t immediately associate those with a symposium, but it was precisely that scene that the “ScholarSHIP” offered on 4 May 2023, setting off on a cruise down the Danube from Regensburg to Passau for a conference of a special kind. Over 100 lecturers in 27 teams from 12 universities and universities of applied sciences boarded the ship. In their luggage they had problem statements pertaining to their particular exam scenarios which they had submitted in the run-up. There was also a team of experts on board to provide advice and practical help with didactic, legal and organisational aspects.

Competency-based design of digital examinations

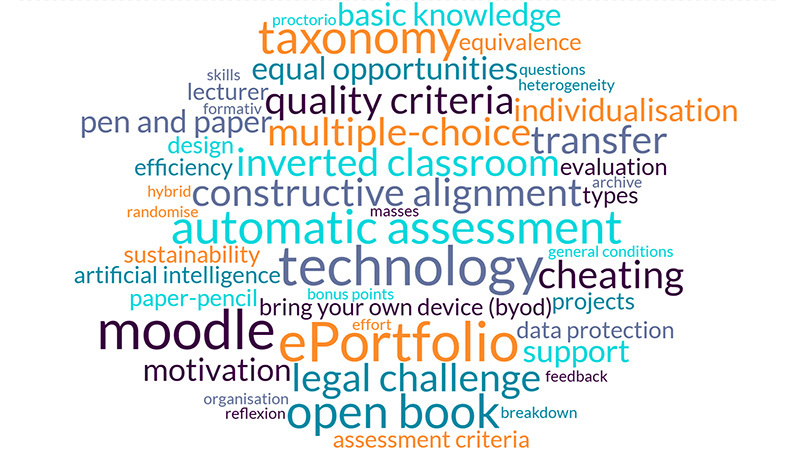

Here, the topics ranged widely, with questions concerning everything from e-portfolios to in-person digital written examinations to required previous knowledge to digital archiving. Figure 1 shows a word cloud containing the main key words that arose from the questions dealt with.

The topics were systematically analysed and worked on in four sprints. It quickly became clear that it is very difficult to convert analogue examinations directly into the digital space as they are. Therefore, the following question needs to be asked with regard to every exam situation: where am I prepared to accept changes and to what extent?

The challenges are great

In the initial analysis of the questions submitted it became clear that there are technical and organisational hurdles to overcome in many places that hamper the introduction of electronic examinations across the board. Two points in particular were mentioned repeatedly: (1) in many places there aren’t any exam halls big enough to hold examinations in popular degree courses with large numbers of students. At the same time, the Wi-Fi connection in lecture halls is often not good enough to be able to hold electronic examinations where students bring their own devices. (2) Lecturers often don’t get enough technical and didactic support with converting analogue exam formats to digital ones.

Despite intense discussion with the experts, there are still no solutions to many questions, for instance:

- How do digital artefacts (e.g. videos) have to be archived?

- How can visiting lecturers be involved in the process in the case of examinations that require a great deal of development effort?

- What (new?) skills do lecturers need when it comes to digital examinations?

Diverse solutions are possible

Practicable solutions were developed for most of the questions, however, including the following three.

In electronic examinations, how can I create comparability regarding degree of difficulty and learning outcome when it comes to randomised tasks?

For electronic examinations lecturers often fall back on a question pool. In this case, it is not easy to guarantee that the tasks set will actually be comparable.

Staff at Ruhr University in Bochum have developed the following approach: they assign the individual tasks to different learning outcomes based on a matrix. In addition, the tasks are categorised according to their respective levels in a learning outcome taxonomy. This means that all students can be assessed using tasks of comparable complexity by way of cluster samples.
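The matrix idea can be illustrated with a small sketch. It is not the Bochum team's implementation: the task pool, the field names `outcome` and `level`, the `blueprint` and the function `build_exam` are all hypothetical, and drawing a fixed number of tasks from each cell of the matrix is only one assumed way of reading the sampling described above.

```python
import random
from collections import defaultdict

def build_exam(pool, blueprint, seed=None):
    """Draw tasks so that every exam has the same number of tasks
    per (learning outcome, taxonomy level) cell of the matrix."""
    rng = random.Random(seed)

    # Group the pool by matrix cell.
    cells = defaultdict(list)
    for task in pool:
        cells[(task["outcome"], task["level"])].append(task)

    exam = []
    for cell, count in blueprint.items():
        candidates = cells.get(cell, [])
        if len(candidates) < count:
            raise ValueError(f"not enough tasks in cell {cell}")
        # Random draw without replacement inside one cell.
        exam.extend(rng.sample(candidates, count))
    return exam

# Hypothetical question pool; each task is tagged with its matrix cell.
pool = [
    {"id": "T1", "outcome": "LO1", "level": "understand"},
    {"id": "T2", "outcome": "LO1", "level": "understand"},
    {"id": "T3", "outcome": "LO1", "level": "apply"},
    {"id": "T4", "outcome": "LO1", "level": "apply"},
    {"id": "T5", "outcome": "LO2", "level": "analyse"},
    {"id": "T6", "outcome": "LO2", "level": "analyse"},
    {"id": "T7", "outcome": "LO2", "level": "analyse"},
]

# Hypothetical blueprint: how many tasks each exam takes from each cell.
blueprint = {
    ("LO1", "understand"): 1,
    ("LO1", "apply"): 1,
    ("LO2", "analyse"): 2,
}

exam_a = build_exam(pool, blueprint, seed=1)
exam_b = build_exam(pool, blueprint, seed=2)
```

Two students may receive different tasks, but both exams cover the same learning outcomes at the same taxonomy levels. Comparability then rests on how carefully the tasks were assigned to the cells in the first place.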

How do I motivate students to learn and revise throughout the semester?

A team from the University of Bayreuth tackled one of the fundamental problems with examinations: “bulimic” revision behaviour at the end of the semester.

They want to use exercise sheets to maintain students’ motivation to learn and revise consistently throughout the semester. The students get bonus points for tasks they have completed successfully, and those points are included in the exam result at the end.

How can I make performance assessments democratic?

One team from the Technical University of Munich came up with the following answer: collective exams. Here, students develop exam questions for other students. That way, the exam process is part of the learning process as well. During the selection and formulation of the exam questions, the students are not only given a chance to change their perspective but acquire new competencies themselves through the systematic recapitulation of the subject matter.

Beyond specific exam situations, the following solutions emerge:

- In many subjects, online open book formats with strict processing times are a viable way to assess more complex competencies (e.g. analysis of a qualitative study) in an application-based manner.

- In view of ChatGPT, many faculties are returning to in-person written exams in order to assess basic knowledge.

- E-portfolios seem very suitable as a competency-based form of examination but require huge curricular adjustment and a high staff-to-student ratio when it comes to marking exams.

A team from Offenburg University of Applied Sciences formulated two principles for the competency-based design of electronic examinations that may be key:

- Keep it simple: start with small adjustments and keep on developing them.

- Talk about it: communicate with your colleagues and start talking about exams.

Assessment of the future and the role of AI – Observations from two perspectives

On the second day of the symposium at the University of Passau, Prof. Dr. Siegfried Handschuh and Prof. Dr. Sabine Seufert (both from the University of St Gallen) focused on the aspect of AI in connection with exams in their tandem keynote speech. Handschuh approached the topic from a technical standpoint, and after that, one of the questions Seufert dealt with was the competencies that the students need when dealing with generative AI.

AI has great potential when it comes to supporting assessment tasks

After a brief introduction to the technical functionality of generative AI, Prof. Dr. Siegfried Handschuh presented an assessment experiment using ChatGPT (Project “Ananda”) in his keynote speech. This laboratory study was designed to establish the extent to which short tasks can be assessed automatically using generative AI. Short answers given by students (N=2273) were assessed automatically by a large language model (LLM) and compared with the assessments of two human appraisers. The result revealed that AI did not perform less well than the human appraisers overall. Within a tolerance of +/- one point, there is a 72.55% match between AI and the average of the two human appraisers.

For Handschuh, two results of this study are particularly interesting: 1. there is only moderate consistency between the two human appraisers; 2. overall, AI does not perform any less well than the human evaluators. Therefore, there is much indication that short tasks can be automatically assessed very well using AI, with the added “bonus” that AI is more deterministic: if you were to perform several cycles, AI would always give you very similar assessments – something that would not be so certain with human appraisers.
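The agreement figure reported above can be read as the share of answers for which two scores differ by no more than one point. The following sketch shows that calculation under this assumption. The function name `agreement_within` and all scores are invented for illustration and are not data from the study.

```python
def agreement_within(scores_a, scores_b, tolerance=1.0):
    """Share of answers whose two scores differ by at most `tolerance`."""
    if len(scores_a) != len(scores_b):
        raise ValueError("both score lists must have the same length")
    if not scores_a:
        raise ValueError("score lists must not be empty")
    hits = sum(1 for a, b in zip(scores_a, scores_b)
               if abs(a - b) <= tolerance)
    return hits / len(scores_a)

# Invented scores for six short answers (not data from the study).
human_1 = [3, 5, 2, 4, 0, 1]
human_2 = [4, 5, 0, 4, 1, 3]
ai = [3, 4, 3, 2, 0, 2]

# Average of the two human appraisers per answer.
human_mean = [(a + b) / 2 for a, b in zip(human_1, human_2)]

# Agreement between the AI and the human average, within one point.
ai_vs_humans = agreement_within(ai, human_mean)

# The same measure applied to the two human appraisers.
human_vs_human = agreement_within(human_1, human_2)

print(f"AI vs. human average: {ai_vs_humans:.2%}")
print(f"Human 1 vs. human 2:  {human_vs_human:.2%}")
```

Computing the same measure for the two human appraisers gives a baseline: an AI agreement rate only means something when set against how closely the humans agree with each other.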

AI should be regarded as a partner

At the start of her talk, Prof. Dr. Sabine Seufert outlined the status quo regarding AI in education. The way she sees it, the second wave of digitisation (AI, Natural Language Processing) is just beginning to take hold at universities. It affects, for example, goals and contents (computers as assistance systems or partners), methods and forms of learning (AI-based methods and tools for personalised learning, e.g. real-time feedback) and also learning environments (smart learning environments and mixed realities). Seufert advocates a collaboration between humans and AI (hybrid intelligence). She sees great potential in a successful partnership between humans and machines in university teaching, where the humans retain control (e.g. in assessing competencies, providing emotional and motivational assistance, or dealing with conflict) and the AI is there as an assistant to ease the load (e.g. in making routine decisions, simple recommendations regarding content, generating quizzes…).

When it comes to exams, a partnership structured like this could come into play in the shared preparation of exam questions, AI-based marking aids or spelling checks, or analytics for adaptive coaching, for example.

Seufert subsequently focused on the aspect of competencies in dealing with generative AI:

- Students need to acquire strategies for dealing with AI competently. This includes prompting skills, for example. The better the prompt, the better the output generated by AI (metacognitive knowledge).

- Generative AI systems support the building of expertise. When AI is used, you can clearly define not only what should be tested (e.g. knowledge related to the subject; writing skills) but also what is less important. Here, students are explicitly encouraged to use generative AI systems. Handing less important tasks over to AI, for instance, makes it possible to assess competencies at a higher level. Moreover, AI works like an assistance system that can be used to build on each individual’s expertise step by step in a personalised way.

To conclude her talk, Seufert commented on the current trend of lecturers switching to oral exams. She explicitly argued against this switch on the grounds that it gives rise to new problems (including lack of objectivity and validity; halo effect) and to a change of format that results in different competencies being assessed. Instead, she recommends that lecturers consider how they could adapt their exams so that the use of AI tools is possible or even welcome.

Conclusion

To return to the ship metaphor, here are two closing thoughts:

- a lot of water will flow down the Danube before digital and competency-based exams can be implemented across the board;

- it feels as though AI has blanketed the university domain all of a sudden like a gathering thunderstorm that we cannot escape. It challenges university lecturers to react to the altered conditions by changing course, particularly – although not only – when it comes to the topic of assessment.

The project “QUADIS”, host of the symposium “Analog | Digital | Integriert: Kompetenzorientiertes Prüfen der Zukunft”, is sponsored by Stiftung Innovation in der Hochschullehre (innovation in teaching at universities foundation).

Suggestion for citation of this blog post

Bachmaier, R. & Hawelka, B. (2023, June 1). To pastures new! – A review of the symposium “Analog | Digital | Integriert: Kompetenzorientiertes Prüfen der Zukunft” [Analogue | Digital | Integrated: Competency-based assessment of the future]. Lehrblick – ZHW Uni Regensburg. https://doi.org/10.5283/20230601.EN

Regine Bachmaier

Dr. Regine Bachmaier is a research associate at the Centre for University and Academic Teaching (ZHW) at the University of Regensburg. She supports teachers in the field of “digital teaching”, among other things, through workshops and individual counselling. In addition, she tries to keep up to date with the latest developments in the field of “digital teaching” and pass them on.

Birgit Hawelka

Dr. Birgit Hawelka is a research associate at the Centre for University and Academic Teaching (ZHW) at the University of Regensburg. Her research and teaching focus on the topics of teaching quality and evaluation. She is also curious about all developments and findings in the field of university teaching.